Unlocking the Power of Generative AI with LangChain: Integrating a Vector Database for Natural Language Question Answering

Introduction to the Power of Generative AI:

In the world of artificial intelligence, natural language understanding and question answering have become essential for organizations that want to get more value from their data. LangChain is an orchestration framework that lets you connect a vector database to OpenAI’s large language models (LLMs), so users can ask questions about your data in natural language and get answers grounded in it. This post walks through how the approach works and how it can enhance your generative AI services.

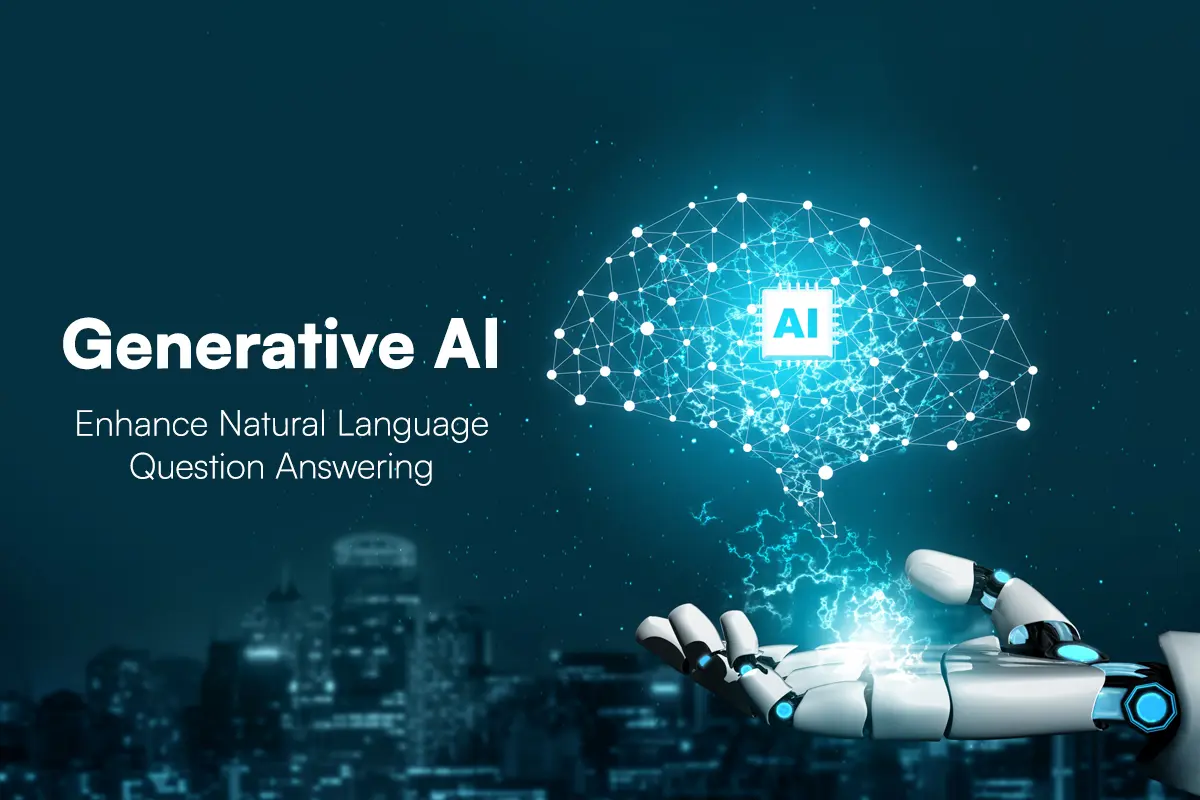

The Core Functionality:

Let’s walk through, step by step, how this integration lets you chat with your data:

User Asks a Question:

It all begins with a user asking a question in plain language. Generative AI can answer such questions conversationally, so the user does not need to know a query language or where the data is stored.

Embedding the Question with OpenAI:

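Once the user has posed the question, it is converted into an embedding by an OpenAI embedding model. An embedding is a fixed-length list of numbers (a vector) that captures the meaning of the text, so texts with similar meanings produce vectors that lie close together.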
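Here is a minimal Python sketch of "text in, vector out." It uses a hypothetical `toy_embed` function as a stand-in for a real OpenAI embedding call. In LangChain, that call would be something like `OpenAIEmbeddings().embed_query(question)` from the `langchain_openai` package, which needs an API key. The toy version only hashes words into buckets, so it reflects shared vocabulary rather than true meaning. It shows the shape of the output, not the quality.

```python
import hashlib
import math
import re

DIM = 64  # hypothetical vector size; real OpenAI embeddings are much longer


def toy_embed(text: str, dim: int = DIM) -> list[float]:
    """Hypothetical stand-in for an OpenAI embedding call.

    Hashes each lowercase word into one of `dim` buckets, counts the hits,
    and scales the result to unit length. It captures shared words, not
    meaning, but like a real model it maps any text to a fixed-length vector.
    """
    vec = [0.0] * dim
    for word in re.findall(r"[a-z0-9]+", text.lower()):
        bucket = int(hashlib.sha256(word.encode("utf-8")).hexdigest(), 16) % dim
        vec[bucket] += 1.0
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm else vec


question_vector = toy_embed("What is our refund policy?")
print(len(question_vector))  # 64
```

Scaling to unit length means later similarity comparisons depend only on the direction of each vector, not on how long the text was.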

Sending the Question Vector to the Vector Database:

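The question vector is then sent to the vector database. Ahead of time, each document in this database was embedded with the same model, so every stored document has its own vector. Because vectors represent the meaning of text, the database can compare the question vector against the stored vectors to find the closest matches.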
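The indexing side can be sketched the same way. Below, a hypothetical `ToyVectorStore` keeps each document's text, metadata, and vector in a Python list. It embeds every document with the same hypothetical `toy_embed` function used for questions, which is redefined here so the snippet runs on its own. A production vector database adds persistence and fast search, but the core idea is the same: it stores one vector per document.

```python
import hashlib
import math
import re
from dataclasses import dataclass, field


def toy_embed(text: str, dim: int = 64) -> list[float]:
    """Hypothetical word-hashing embedding (stand-in for an OpenAI model)."""
    vec = [0.0] * dim
    for word in re.findall(r"[a-z0-9]+", text.lower()):
        vec[int(hashlib.sha256(word.encode("utf-8")).hexdigest(), 16) % dim] += 1.0
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm else vec


@dataclass
class StoredDoc:
    text: str
    metadata: dict
    vector: list[float]


@dataclass
class ToyVectorStore:
    """Hypothetical in-memory vector store: one vector per stored document."""
    docs: list[StoredDoc] = field(default_factory=list)

    def add(self, text: str, metadata: dict) -> None:
        # Embed at indexing time with the SAME model used for questions,
        # so question and document vectors are directly comparable.
        self.docs.append(StoredDoc(text, metadata, toy_embed(text)))


store = ToyVectorStore()
store.add("Refunds are issued within 30 days of purchase.", {"source": "policy.pdf"})
store.add("Our office is open Monday to Friday.", {"source": "faq.md"})
print(len(store.docs))  # 2
```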

Retrieving Relevant Documents:

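The vector database returns the documents whose vectors are most similar to the question vector, typically the top few ranked by a measure such as cosine similarity. These are treated as relevant to the user’s question. This step is crucial because the quality of the final answer depends on retrieving content that actually relates to the question.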
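Retrieval itself is a nearest-neighbour search. This sketch scores every stored vector against the question vector with cosine similarity and keeps the top `k`. The three-dimensional vectors and document IDs are made up for illustration. Real vector databases usually use approximate indexes instead of scoring every document, but they return the same kind of ranked list.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity: 1.0 means same direction, 0.0 means unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def top_k(query: list[float], indexed: dict[str, list[float]], k: int = 2):
    """Return the k (doc_id, score) pairs most similar to the query vector."""
    scored = [(doc_id, cosine(query, vec)) for doc_id, vec in indexed.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Hypothetical 3-dimensional vectors; real embeddings are far longer.
indexed = {
    "refund-policy": [0.9, 0.1, 0.0],
    "shipping-times": [0.6, 0.7, 0.1],
    "office-hours": [0.0, 0.2, 0.9],
}
query = [1.0, 0.2, 0.0]
print([doc_id for doc_id, _ in top_k(query, indexed, k=2)])
# ['refund-policy', 'shipping-times']
```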

Creating a Contextual Information String:

The text of the retrieved documents, along with relevant metadata such as each document’s source, is concatenated into a single context string. This string and the user’s original question are then sent to the LLM.
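Here is a minimal sketch of this concatenation step. It assumes each retrieved document is a plain dict with hypothetical `"text"` and `"metadata"` keys. Numbering each passage and printing its metadata next to the text lets the LLM, and ultimately the user, see where each piece of context came from.

```python
def build_context(docs: list[dict]) -> str:
    """Join retrieved documents and their metadata into one context string."""
    parts = []
    for i, doc in enumerate(docs, start=1):
        # Render metadata as "key=value" pairs in a stable (sorted) order.
        meta = ", ".join(f"{k}={v}" for k, v in sorted(doc["metadata"].items()))
        parts.append(f"[{i}] ({meta})\n{doc['text']}")
    # Blank lines keep the passages visually separate for the model.
    return "\n\n".join(parts)


retrieved = [
    {"text": "Refunds are issued within 30 days of purchase.",
     "metadata": {"source": "policy.pdf", "page": 2}},
    {"text": "Orders ship within 2 business days.",
     "metadata": {"source": "faq.md"}},
]
print(build_context(retrieved))
```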

Instructing the Language Model:

Through a prompt, the LLM is instructed to answer the user’s question based on the provided context. This instruction is vital because it tells the model to ground its answer in the retrieved documents rather than relying only on what it learned during training.
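The instruction is simply part of the prompt text. Here is one possible template written with plain Python string formatting. The exact wording is an assumption, not a fixed LangChain prompt. LangChain's prompt templates, such as `ChatPromptTemplate`, serve the same purpose: they fill the context and question into a reusable instruction.

```python
# Hypothetical wording; the key parts are "use only the context" and a
# fallback for when the context does not contain the answer.
PROMPT_TEMPLATE = """Answer the question using only the context below.
If the context does not contain the answer, say that you don't know.

Context:
{context}

Question: {question}
Answer:"""


def build_prompt(context: str, question: str) -> str:
    """Fill the retrieved context and the user's question into the template."""
    return PROMPT_TEMPLATE.format(context=context, question=question)


print(build_prompt("Refunds are issued within 30 days.", "What is the refund window?"))
```

The "say that you don't know" line is a common design choice. Without it, a model asked about something missing from the context may fall back on guessing.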

Generating an Answer:

The LLM reads the prompt, draws on the supplied context, and generates a natural language answer to the user’s question.
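Putting the steps together, the whole flow fits in one function. In this sketch, the embedding model, vector search, and LLM are passed in as plain callables, all of them hypothetical. This lets the stubs below stand in for OpenAI and a real vector database. LangChain provides components for each of these roles. The stub LLM simply echoes the first line of context instead of generating text.

```python
from typing import Callable


def answer_question(
    question: str,
    embed: Callable[[str], list[float]],
    search: Callable[[list[float], int], list[dict]],
    llm: Callable[[str], str],
    k: int = 3,
) -> str:
    """Hypothetical retrieval-augmented QA pipeline with injected dependencies."""
    query_vector = embed(question)                  # embed the question
    docs = search(query_vector, k)                  # retrieve the top-k documents
    context = "\n\n".join(d["text"] for d in docs)  # build the context string
    prompt = (                                      # instruct the model
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"
    )
    return llm(prompt)                              # generate the answer


# Stubs standing in for OpenAI and the vector database (illustration only).
def fake_embed(text: str) -> list[float]:
    return [float(len(text))]


def fake_search(vector: list[float], k: int) -> list[dict]:
    return [{"text": "Refunds are issued within 30 days."}][:k]


def fake_llm(prompt: str) -> str:
    first_context_line = prompt.split("Context:\n", 1)[1].split("\n", 1)[0]
    return f"Stub answer based on: {first_context_line}"


print(answer_question("How long do refunds take?", fake_embed, fake_search, fake_llm))
# Stub answer based on: Refunds are issued within 30 days.
```

Injecting the dependencies keeps the orchestration logic separate from any one provider. You can test it offline and swap in real components later.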

Presenting the Answer:

Finally, the LLM’s answer is presented to the user, who can now interact with the data conversationally, subject to the privacy safeguards described below.

Privacy and Security:

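It’s important to note that in this process, the different systems communicate over encrypted API connections (HTTPS), so your proprietary data is protected in transit throughout the interaction. Encryption in transit does not hide the data from the services themselves, however. The document text you embed, and the question plus retrieved passages sent with each prompt, are processed by OpenAI’s API. Review your provider’s data-usage policies before sending sensitive information through the integration of LangChain, OpenAI, and the vector database.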

Conclusion:

Integrating a vector database with OpenAI’s large language models using LangChain offers a powerful solution for natural language question answering. This approach lets organizations get more out of their generative AI services while protecting data privacy and security. By following this orchestration pattern, you can take the next step in putting your data to work and make it a valuable asset for your organization’s growth and success.