A Deep Dive into Stable Diffusion and ControlNet for Effortless Pose-Controlled Image Generation

Introduction to Image Generation with Stable Diffusion and ControlNet

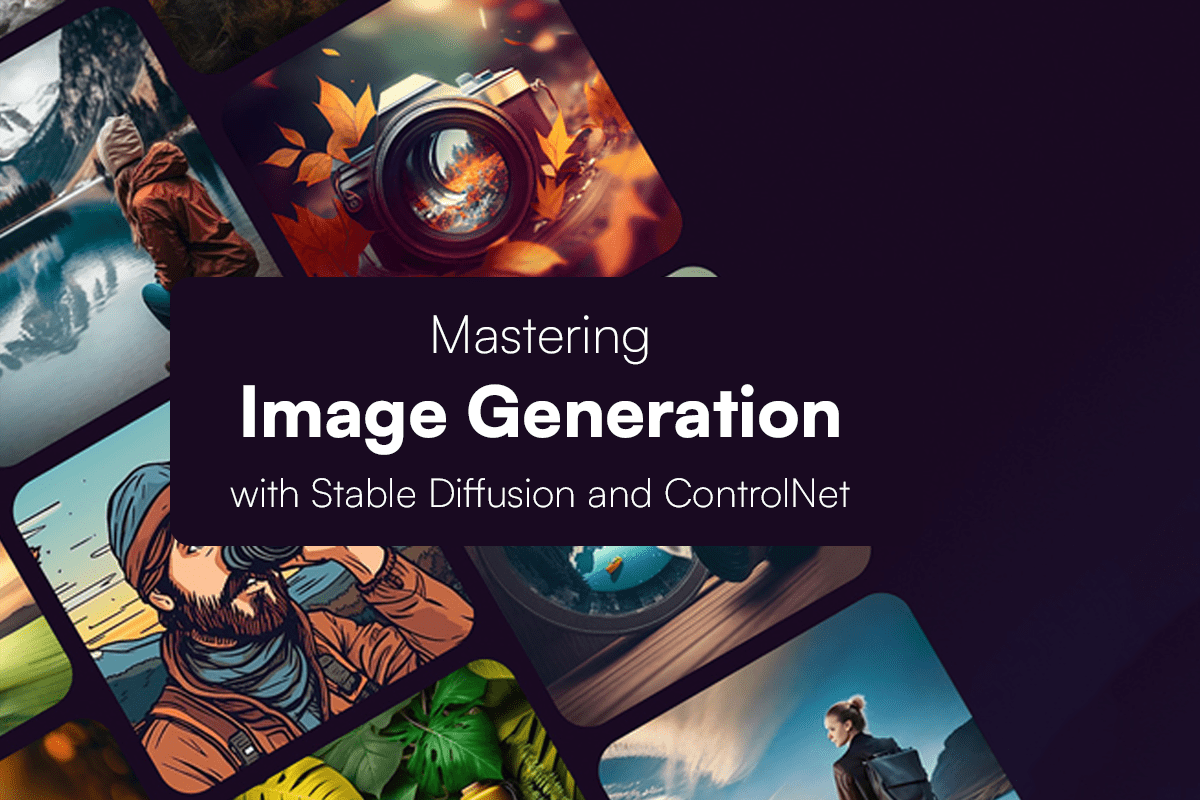

From a user’s standpoint, the advent of Generative AI technologies like Stable Diffusion opens up thrilling possibilities for seamless creativity. It allows individuals to effortlessly craft visually captivating and varied content with minimal input. The user-friendly applications of this technology empower users to delve into novel realms of artistic expression, design, and storytelling, ushering in an era where sophisticated generative tools are easily accessible.

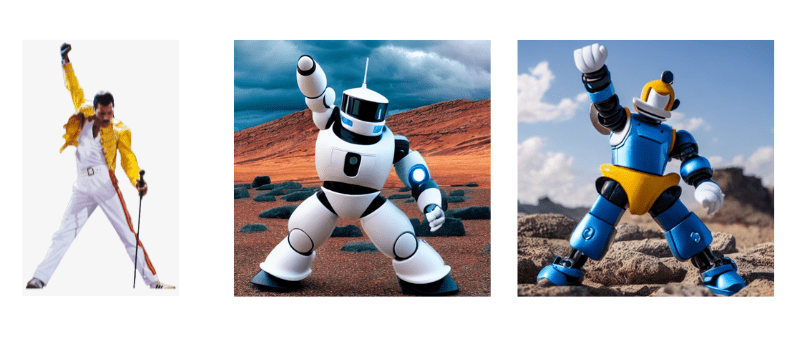

In the context of controlling Images Generated by Stable Diffusion, particularly in replicating the iconic pose of Freddie Mercury from the 1980s, ControlNet serves as a valuable tool. ControlNet is designed as an adapter that introduces spatial conditioning controls to Stable Diffusion. These adapters function as supplementary models fine-tuned on top of expansive text-to-image models like Stable Diffusion. This approach ensures that users can assert precise control over the generation process, offering a unique and personalized touch to the output while avoiding any concerns related to plagiarism.

Prerequisites

- You need to have a google account. This will be used to access Google Colab.

- Have familiarity with Generative AI and Stable diffusion. Check out this amazing post to get a high level look on the workings of Stable diffusion.

- Have working knowledge of the HuggingFace Diffusers library and know what pipelines are in Diffusers. Check this out to get familiar with the Diffusers library and this is a great tutorial on AutoPipelines – a fantastic feature of the Diffusers library

Upon meeting the specified requirements, you can commence the process. Begin by launching Google Colab and initiating a new notebook. Navigate to the “Runtime” tab, proceed to “Change Runtime Type,” and opt for the T4 GPU from the available options. This sequence of steps ensures that you are well-equipped to proceed with your tasks efficiently. This will be the working environment for our demo.

Installing Required Libraries

Before we begin, make sure the following libraries are installed.

Generating Human Pose Estimation of the Control Image

To initiate the process, our initial step involves extracting the human pose estimation (HPE) from the Freddie Mercury image. This HPE will serve as a conditioning image, compelling the model to generate an image mirroring the exact pose. For a comprehensive understanding of human pose estimation, refer to a provided post. In essence, the HPE image is a skeletal representation of the human body, pinpointing essential features such as limbs and joints.

Constructing a Stable Diffusion Pipeline

Now we will create our image generation pipeline using StableDiffusionControlNetPipeline, where we will pass our ControlNet model into. For this case, we have a ControlNet model pre-trained on human poses available for use. We will use Stable Diffusion v1.5 as this is the model ControlNet is trained on.

Note: make sure to add .to(“cuda”) to make sure the pipeline runs on GPU.

Passing in the Prompt

Now we are ready to generate our custom image. First to make sure we do not get bad quality images we add a set of negative prompts. Now, the objective is to produce an image depicting a robot standing in an isolated and barren setting. Let’s pass this prompt in along with our control pose image and see the results. We will ask it to generate 2 images for this prompt.

Fine Tuning our Results Further

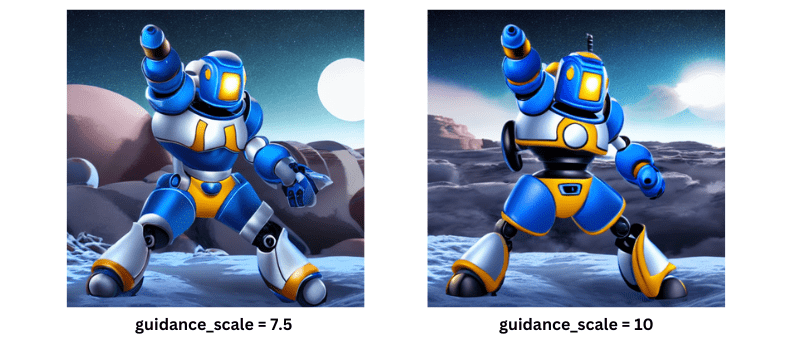

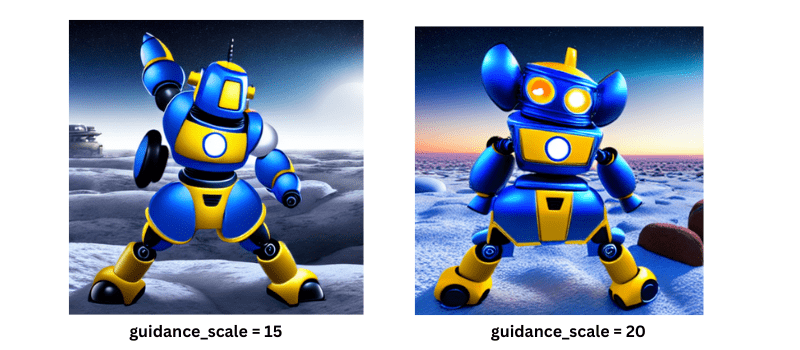

We can further tune the image output by changing the values of the hyperparameters like num_inference_steps and guidance_scale. But our pipeline produces a different image every time it runs, thus we require a reproducible pipeline for this case. This can be accomplished by passing a generator seed to our pipeline.

Let’s change the value of guidance_scale and see the difference in the images.

A higher value for the guidance scale prompts the model to create images more closely aligned with the provided text prompt, but this comes at the cost of diminished image quality. Next, you should experiment by

changing num_inference_steps to different values and see what results you get.

Conclusion

Conclusively, the integration of Stable Diffusion and ControlNet has democratized the manipulation of poses in digital images, granting creators unparalleled precision and adaptability. Its use cases span industries like fashion and film where it can help in making virtual designs with precise pose control, to casual users online helping them create virtual avatars in any pose they can imagine.