
Access Web Data Using Python

- Raj Patel

- April 12, 2023

Introduction to Accessing Web Data Using Python

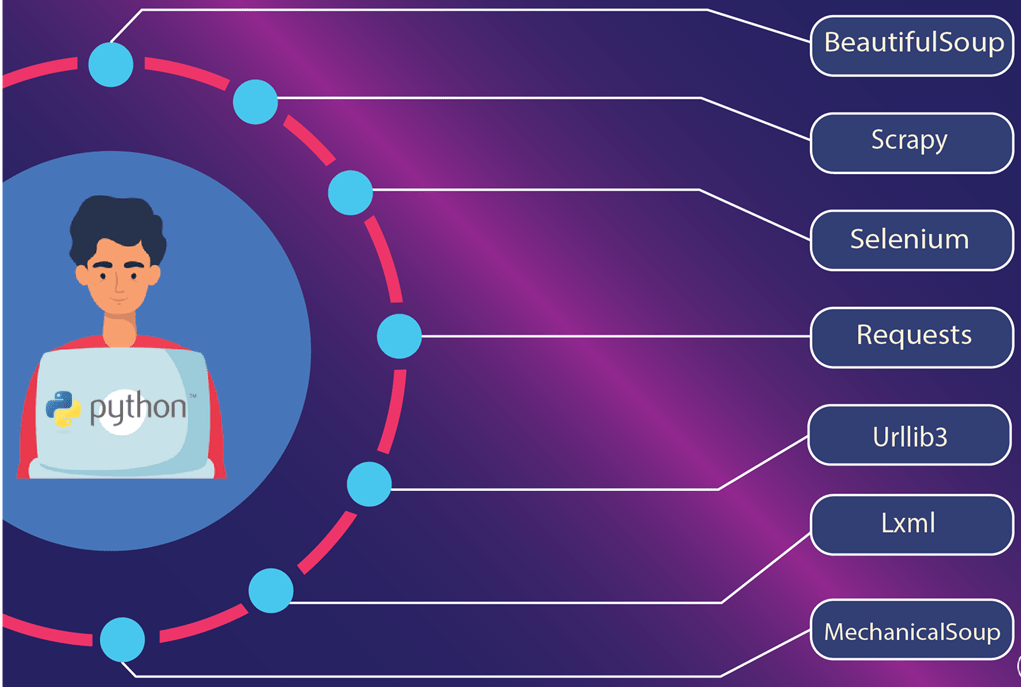

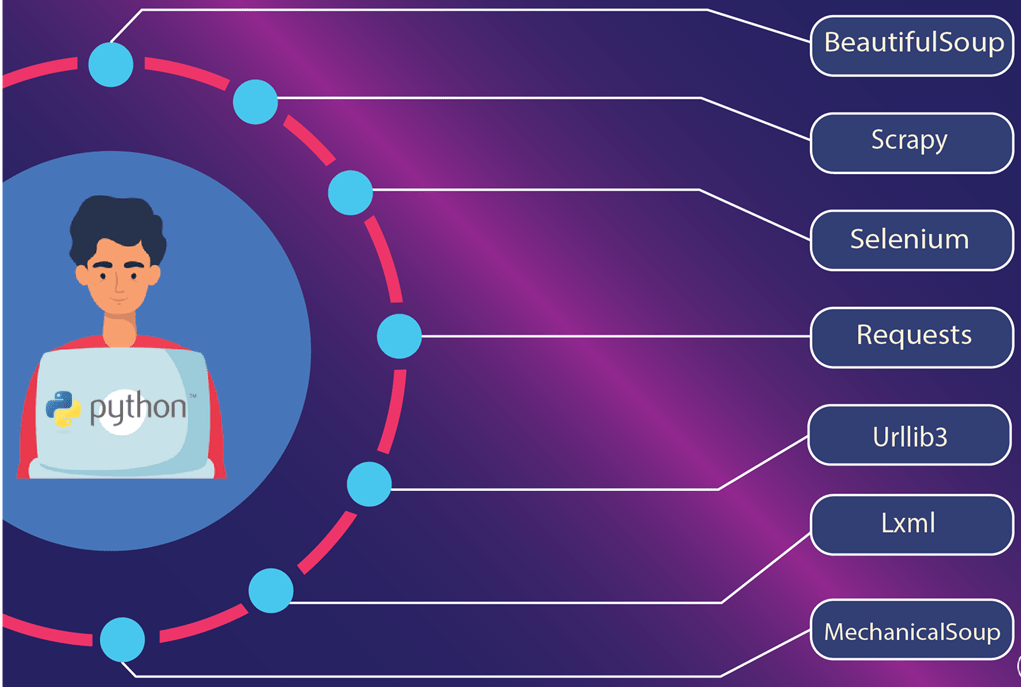

Python is very useful for accessing web data. In this post, we explain how to extract data from a website and work with it. We use regular expressions (the re module), Beautiful Soup, and urllib to get the data from websites.

Regular Expressions

With regular expressions, we can search for any substring in a string, split a string into a list, and count how many times a specific word appears in a text or file. The following code gets the integer numbers from a file and calculates their sum.

    import re

    # Read the whole file as one string, e.g. "12fijjhk2j5jnj2k9"
    with open('./filename') as f:
        x = f.read()

    # Find every run of one or more digits, e.g. ['12', '2', '5', '2', '9']
    y = re.findall('[0-9]+', x)

    # Add the numbers up, e.g. 12 + 2 + 5 + 2 + 9 = 30
    total = 0
    for dt in y:
        total = total + int(dt)
    print(total)
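The paragraph above also mentions searching for a substring, splitting a string into a list, and counting a specific word. A minimal sketch of those three operations with Python's re module follows; the sample sentence and the word "data" are made up purely for illustration.

```python
import re

# Hypothetical sample text, used only for illustration
text = "Web data is useful. We scrape data, clean data, and store data."

# Search for a substring: re.search returns a match object or None
match = re.search(r"scrape", text)
if match:
    print("found at index", match.start())

# Convert the string into a list of words by splitting on non-word characters,
# dropping the empty string left behind by the trailing period
words = [w for w in re.split(r"\W+", text) if w]
print(words)

# Count how many times a specific word appears (whole words, case-insensitive)
count = len(re.findall(r"\bdata\b", text, flags=re.IGNORECASE))
print(count)
```

The \b word boundaries keep the count from matching "data" inside longer words such as "database".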

Beautiful Soup

Beautiful Soup is a Python library for parsing HTML and XML. Combined with an HTTP client, it lets us extract data from any website given its URL. Open cmd or a terminal and run the commands below to install the required packages:

- pip install beautifulsoup4

- pip install requests

- pip install html5lib

To get the links of techifysolutions.com, we fetch the URL content with the requests module, pass that content to Beautiful Soup to parse the HTML, and then find all anchor (a) tags in the resulting Beautiful Soup object.
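A minimal sketch of these steps, assuming requests and bs4 are installed. The helper names extract_links and fetch_links are hypothetical, and the built-in "html.parser" is used so the parsing step works even without html5lib (passing "html5lib" instead is an option once it is installed).

```python
import requests
from bs4 import BeautifulSoup


def extract_links(html):
    """Parse an HTML string and return the href of every anchor tag."""
    # "html.parser" ships with Python; "html5lib" also works if installed
    soup = BeautifulSoup(html, "html.parser")
    # href=True skips anchor tags that have no href attribute
    return [a["href"] for a in soup.find_all("a", href=True)]


def fetch_links(url):
    """Download a page with requests and return the links it contains."""
    response = requests.get(url, timeout=10)
    response.raise_for_status()  # raise an error for 4xx/5xx responses
    return extract_links(response.text)
```

For example, fetch_links("https://techifysolutions.com/") would return the href values found on that page; the exact list depends on the live site. Keeping parsing separate from downloading makes extract_links easy to try on a static HTML string.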

The extracted links look like this:

- https://techifysolutions.com/services/product-development/

- https://techifysolutions.com/services/mobility

- https://techifysolutions.com/services/cloud-advisory-consulting

- https://techifysolutions.com/services/devops-automation

- https://techifysolutions.com/services/product-application-ui-ux

In the same way, we can find HTML div, p (paragraph), and img tags.
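A minimal sketch of that, run on a small hypothetical HTML snippet instead of a live page (the markup and the class name "service" are assumptions for illustration):

```python
from bs4 import BeautifulSoup

# Hypothetical HTML snippet standing in for a downloaded page
html = """
<div class="service">
  <p>Product development</p>
  <img src="/images/product.png" alt="Product">
</div>
<div class="service">
  <p>Cloud advisory</p>
  <img src="/images/cloud.png" alt="Cloud">
</div>
"""

soup = BeautifulSoup(html, "html.parser")

# All div tags that carry the class "service"
divs = soup.find_all("div", class_="service")

# Text of every paragraph tag, with surrounding whitespace stripped
paragraphs = [p.get_text(strip=True) for p in soup.find_all("p")]

# The src attribute of every img tag
image_sources = [img.get("src") for img in soup.find_all("img")]

print(len(divs), paragraphs, image_sources)
```

class_ has a trailing underscore because class is a reserved word in Python.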

Get JSON data from the URL

When we visit https://jsonplaceholder.typicode.com/todos/1, we get the following object:

    {
      "userId": 1,
      "id": 1,
      "title": "delectus aut autem",
      "completed": false
    }
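Before fetching anything over the network, it can help to see what the JSON-to-Python conversion does on its own. A minimal sketch that parses the object above from a string literal (no HTTP request is made here):

```python
import json

# The object shown above, as a JSON string
raw = '{"userId": 1, "id": 1, "title": "delectus aut autem", "completed": false}'

# json.loads turns a JSON object into a Python dict
todo = json.loads(raw)

print(type(todo).__name__)  # dict
print(todo["title"])        # delectus aut autem
print(todo["completed"])    # JSON false becomes Python False
```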

To fetch this object and access a particular field, we can use the urllib module together with json:

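    import urllib.request, urllib.error
    import json

    url = 'https://jsonplaceholder.typicode.com/todos/1'

    # Open the URL and read the response body as a string
    uh = urllib.request.urlopen(url)
    data = uh.read().decode()

    # Parse the JSON text into a Python dict
    data_obj = json.loads(data)

    # Access a single field
    title = data_obj['title']
    print(title)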

The output is delectus aut autem. In the above example, we read the data with the urllib Python module, convert it from JSON into a Python dict object, and print the title from that data.

Get latitude and longitude from a location

    import urllib.request, urllib.parse, urllib.error
    import json

    api_key = False
    # Without a Google API key, fall back to the py4e-data service
    if api_key is False:
        api_key = 42
        serviceurl = 'http://py4e-data.dr-chuck.net/json?'
    else:
        serviceurl = 'https://maps.googleapis.com/maps/api/geocode/json?'

    while True:
        address = input('Enter location: ')
        if len(address) < 1:
            break  # an empty input ends the loop

        # Build the query string from the address (and key, if any)
        parms = dict()
        parms['address'] = address
        if api_key is not False:
            parms['key'] = api_key
        url = serviceurl + urllib.parse.urlencode(parms)

        print('Retrieving', url)
        uh = urllib.request.urlopen(url)
        data = uh.read().decode()
        print('Retrieved', len(data), 'characters')

        # Parse the JSON response; treat invalid JSON as a failure
        try:
            js = json.loads(data)
        except json.JSONDecodeError:
            js = None

        if not js or 'status' not in js or js['status'] != 'OK':
            print('==== Failure To Retrieve ====')
            print(data)
            continue

        # Read coordinates, address and place id from the first result
        lat = js['results'][0]['geometry']['location']['lat']
        lng = js['results'][0]['geometry']['location']['lng']
        print('lat', lat, 'lng', lng)
        location = js['results'][0]['formatted_address']
        print(location)
        print('place id is ')
        print(js['results'][0]['place_id'])

The output for the latitude and longitude of Techify Solutions Pvt Ltd looks like this:

    lat 23.0387692 lng 72.50463359999999
    716 BSquare 3, Sindhubhavan Marg, near Tradebulls, Bodakdev, Ahmedabad, Gujarat 380054, India
    place id is
    ChIJRczhcdWEXjkRsfgJcSlI7nU

In the above example, we get the latitude and longitude of a particular location by calling an API that returns data for that location.

The program reads a location from the user, then uses the urllib library to call the API with that location as a query parameter and retrieve the result as JSON. From this data, we extract the place's address, place ID, latitude, and longitude.
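The URL-building and field-extraction steps can be factored into small helper functions so they can be tried without calling the API. This is a minimal sketch: the function names build_geocode_url and extract_place are hypothetical, and the sample response is made up, assumed only to have the same keys the program above reads (results, geometry, location, formatted_address, place_id).

```python
import urllib.parse


def build_geocode_url(serviceurl, address, api_key=None):
    """Return serviceurl with the address (and optional key) URL-encoded."""
    parms = {"address": address}
    if api_key is not None:
        parms["key"] = api_key
    return serviceurl + urllib.parse.urlencode(parms)


def extract_place(js):
    """Return address, place id, latitude and longitude of the first result."""
    first = js["results"][0]
    location = first["geometry"]["location"]
    return {
        "address": first["formatted_address"],
        "place_id": first["place_id"],
        "lat": location["lat"],
        "lng": location["lng"],
    }


# Hypothetical response with the same keys the program above reads
sample = {
    "status": "OK",
    "results": [
        {
            "formatted_address": "Bodakdev, Ahmedabad, Gujarat, India",
            "place_id": "example-place-id",
            "geometry": {"location": {"lat": 23.04, "lng": 72.50}},
        }
    ],
}

print(build_geocode_url("http://py4e-data.dr-chuck.net/json?", "Ahmedabad", 42))
print(extract_place(sample))
```

Here None stands for "no key", where the original program uses False; either works as long as the check matches. urlencode takes care of escaping spaces and commas in the address.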

Summary

If you need large amounts of data from many websites, visiting each site and collecting the data by hand takes a long time and a lot of effort. Web scraping lets us gather that data quickly.